Our live internet shutdowns index measures the financial consequences in real time and provides data from previous years.

This live tracker documents VPN demand spikes around the world and provides context for these increases.

Find all our investigations into the dangers of free VPN apps in one place. We look at ownership issues, security risks and how operators ignore Apple's privacy rules.

VPN vulnerabilities are up 47% in 2023 compared to the past two years, with a 43% increase in confidentiality impact and a 40% rise in severity.

We are tracking the conflict-related websites that Russian authorities have officially blocked since the invasion of Ukraine in February 2022.

This annual report analyzes every major deliberate internet shutdown in 2022, revealing a cost to the world economy of almost $25 billion.

Researchers Valentin Weber and Vasilis Ververis reveal the global reach of Huawei's tech and the U.S. companies that facilitate China's surveillance state.

New vulnerabilities in WiFi software allow attackers to trick victims into connecting to malicious networks and to join secure networks without needing the password.

The Index has been tracking the trade in hacked online accounts since 2018. Access all our research in one place and learn how to protect yourself from identity theft.

The latest cybercrime trends include a massive surge in NFT-related hacks and the rise of cryptojacking. IoT malware is also twice as prevalent in 2022 as it was the previous year.

As Chinese electric vehicle exports continue to rise, we investigated the data privacy risks associated with these smart vehicles and their companion mobile apps.

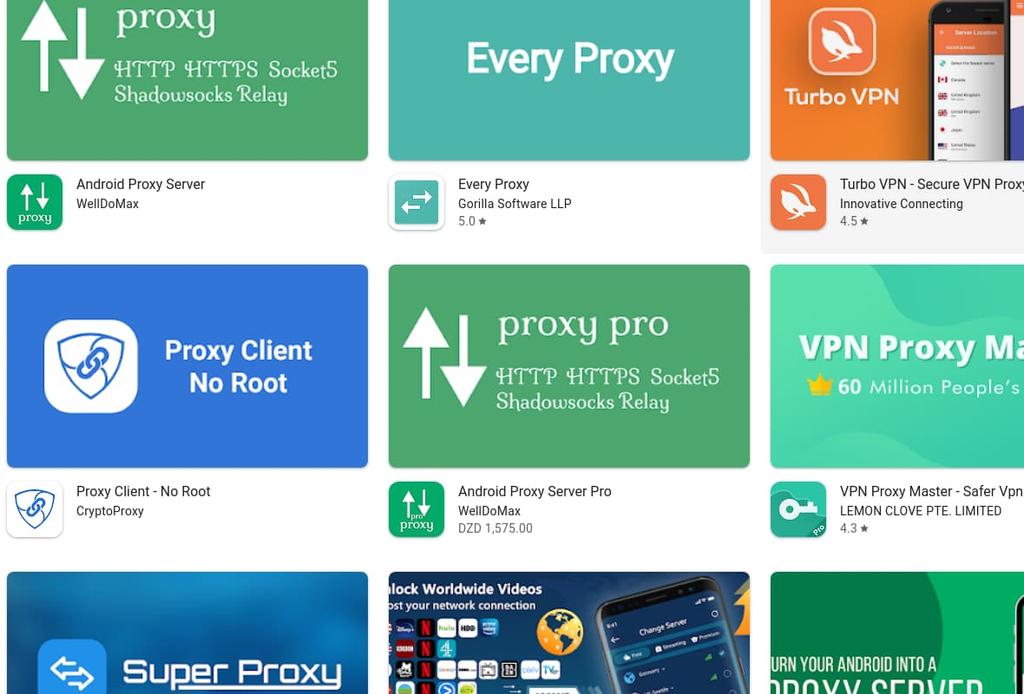

Analysis of 20 of the most popular Android proxy apps reveals that the privacy of nearly 185 million people could be at risk.

We analyzed the 10 highest-ranked unofficial ChatGPT clone apps in each of Apple's and Google's app stores and identified the biggest privacy risks.

As millions of people shifted to home working, employers around the world turned to surveillance software to track their employees' productivity.

This live tracker documents new initiatives introduced in response to the pandemic that pose a risk to digital rights around the world.

We identified and mapped 6.3 million surveillance camera networks globally that use hardware from controversial Chinese firms Hikvision and Dahua.

Head of Research

Simon Migliano leads our research and testing into VPN applications, as well as wider investigations into internet privacy and security matters. Utilizing these findings, he produces in-depth VPN reviews and expert guidance on online safety.

His work examining dangerous free VPNs, identity theft, and internet censorship has been featured in over 1,000 publications worldwide including the BBC, CNET, Wired, and the Financial Times.

Digital Rights Lead

Samuel Woodhams leads our research on censorship, surveillance, and internet freedom, helping to defend digital rights around the world.

His research has been featured by the BBC, Washington Post, Financial Times, and The Guardian.