Rise of the ChatGPT Clones

ChatGPT has captured the collective imagination of the internet since its launch in November 2022.

The chatbot, based on OpenAI’s language-processing AI model known as GPT-3, prompted much hand-wringing about its potential for everything from plagiarized schoolwork[1] to malware-on-demand,[2] but it was also immediately seized upon for its commercial applications.[3]

The buzz around ChatGPT and AI chatbots in general also created an opportunity for those looking to turn a quick profit. ChatGPT is only officially available via a web browser interface on openai.com, which opened the door for mobile developers to flood app stores with unofficial ChatGPT clones.

While ChatGPT is currently free to use during what’s essentially an open beta phase, many of these clone apps charge users for the convenience of using a native app to access the OpenAI technology on their mobile device.

Many clone apps also mine the personal data of OpenAI’s fast-growing user base: ChatGPT exceeded 1 million users less than a week after launch, and interest has only grown since then.

Drawing on our extensive experience conducting privacy analysis of mobile apps, we decided to take a deep dive into this suddenly popular niche to identify and quantify the current level of risk associated with this new type of mobile app.

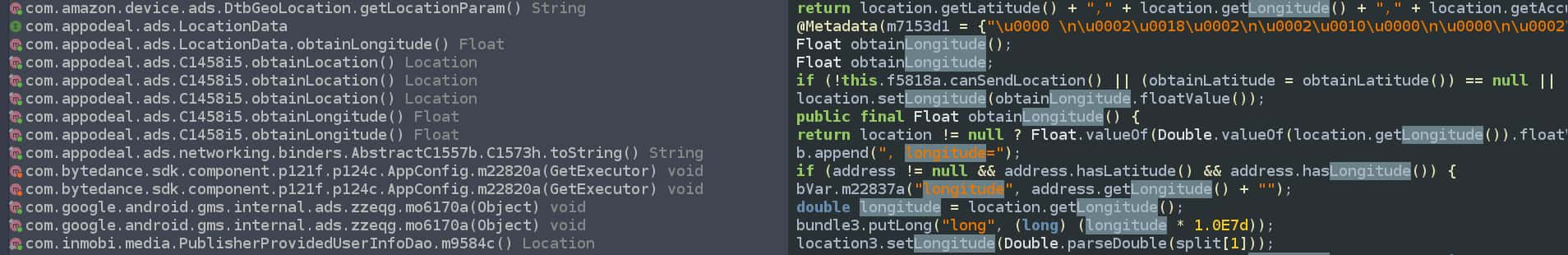

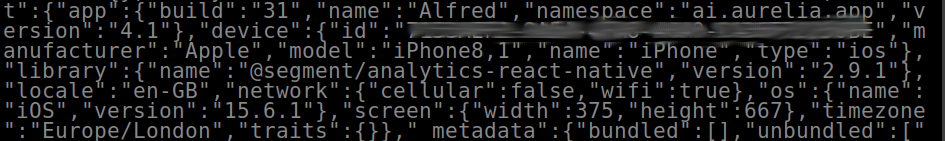

We performed a range of analyses, including using the open-source HTTPS proxy mitmproxy to capture the apps’ network traffic and determine what personal data they were actively sharing, and with whom.
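To illustrate the kind of question this traffic analysis answers, here is a minimal sketch, not the tooling used for this research. It assumes the captured requests have already been exported as plain URLs, and the hostnames and parameter names (`screen_size`, `carrier` and so on) are hypothetical stand-ins for whatever a real app sends.

```python
from collections import defaultdict
from urllib.parse import urlsplit, parse_qsl

# Hypothetical query-parameter names treated as device datapoints.
# Real apps use many different names; this set is an assumption.
DEVICE_KEYS = {"screen_size", "carrier", "os_version", "device_model"}


def summarize(urls):
    """Map each destination host to the device-related keys sent to it."""
    shared = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        for key, _value in parse_qsl(parts.query):
            if key.lower() in DEVICE_KEYS:
                shared[parts.hostname].add(key.lower())
    # Sort the keys so the summary is stable and easy to compare.
    return {host: sorted(keys) for host, keys in shared.items()}


# Made-up example traffic; example.com and example.org are placeholders.
captured = [
    "https://ads.example.com/init?screen_size=1170x2532&carrier=ExampleTel",
    "https://api.example.org/chat?prompt=hello",
    "https://ads.example.com/event?os_version=16.2",
]

print(summarize(captured))
```

Grouping by destination host answers the "with whom" part of the question, while the per-host key list answers the "what".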

We did a close analysis of all apps’ privacy policies. For iOS apps, this included privacy labels on their App Store listings. We also examined Android apps’ source code to identify risky functions and permissions.
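As a rough sketch of what a permissions check can look like, the snippet below lists the permissions declared in a decoded `AndroidManifest.xml` and flags those on a short watchlist. The manifest, the package name and the watchlist are illustrative assumptions, not the apps or criteria from this analysis.

```python
import xml.etree.ElementTree as ET

# Attribute names in the manifest live in the Android XML namespace.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Illustrative watchlist of privacy-sensitive permissions (an assumption,
# not an exhaustive or official list).
RISKY = {
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_CONTACTS",
    "android.permission.READ_PHONE_STATE",
    "android.permission.RECORD_AUDIO",
}


def risky_permissions(manifest_xml):
    """Return the declared permissions that appear on the watchlist."""
    root = ET.fromstring(manifest_xml)
    declared = {
        node.get(ANDROID_NS + "name")
        for node in root.iter("uses-permission")
    }
    return sorted(declared & RISKY)


# Hypothetical manifest for a made-up app.
manifest = """
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.chatclone">
  <uses-permission android:name="android.permission.INTERNET"/>
  <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>
  <uses-permission android:name="android.permission.READ_PHONE_STATE"/>
</manifest>
""".strip()

print(risky_permissions(manifest))
```

A chatbot front end needs network access, so `INTERNET` is unremarkable. Location or phone-state access is harder to justify and is the sort of thing worth a closer look.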

What we found was a clutch of grubby, parasitical apps that added no value for users and instead intruded on users’ privacy to profit from their data.

Our network analysis revealed that the great majority of apps were sharing numerous data points about users’ devices, such as screen size or network operator, which on their own might seem innocuous but in aggregate can potentially be used to “fingerprint” devices.
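The sketch below shows, under simplifying assumptions, why aggregation matters: individually bland values can be combined into a single stable identifier. The field names and values are invented for illustration, and real fingerprinting techniques are typically more elaborate than hashing a handful of fields.

```python
import hashlib
import json


def fingerprint(datapoints):
    """Derive a short, stable identifier from a set of device datapoints."""
    # Serialize with sorted keys so the same values always hash identically,
    # regardless of the order in which they were collected.
    canonical = json.dumps(datapoints, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical datapoints for two made-up devices.
device_a = {
    "screen_size": "1170x2532",
    "carrier": "ExampleTel",
    "os_version": "16.2",
    "timezone": "Europe/London",
}
device_b = dict(device_a, carrier="OtherTel")

print(fingerprint(device_a))
print(fingerprint(device_b))
```

The two devices differ in only one field, yet they produce different identifiers, while the same device yields the same identifier every time. The more fields are collected, the fewer devices share any given combination.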

While none of the apps appears to be outright malicious, we found one app to be sharing data with ByteDance, a company under a cloud when it comes to privacy and data security,[4] which should give anyone pause before installing it.

There’s also the ethical consideration of charging sometimes ludicrous amounts for access to a free product while monetizing user data. While weekly charges were typically around the $5 mark, or a not insignificant $260 or so over the course of a year, one app charged over $60 per month, which works out at over $730 annually.
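The annualized figures are simple multiplication, sketched below with assumed prices. Exact prices for individual apps are not given here, so $5.00 per week and $60.00 or $60.99 per month are illustrative values only.

```python
def annual_cost(price, periods_per_year):
    """Annualize a recurring charge, rounded to whole cents."""
    return round(price * periods_per_year, 2)


# Assumed prices for illustration; not taken from any specific app.
weekly_total = annual_cost(5.00, 52)     # 52 weekly payments
monthly_floor = annual_cost(60.00, 12)   # 12 monthly payments at exactly $60
monthly_example = annual_cost(60.99, 12) # a price slightly over $60

print(weekly_total, monthly_floor, monthly_example)
```

A flat $5 per week comes to $260 a year. A flat $60 per month comes to $720, so an annual total above $730 implies a monthly price a little over $60, such as the assumed $60.99.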